Not so long ago, most people had no idea what a QR Code was. Today the story is different. After the scooters, the many livestreams during the pandemic, and several TV shows explaining what that scramble of black and white blocks in the corner of the screen was (not to mention, though I am mentioning it anyway, the dishonorable case of restaurant menus by QR Code), the QR Code has gone pop. The curious thing is that the invention dates back to 1994.

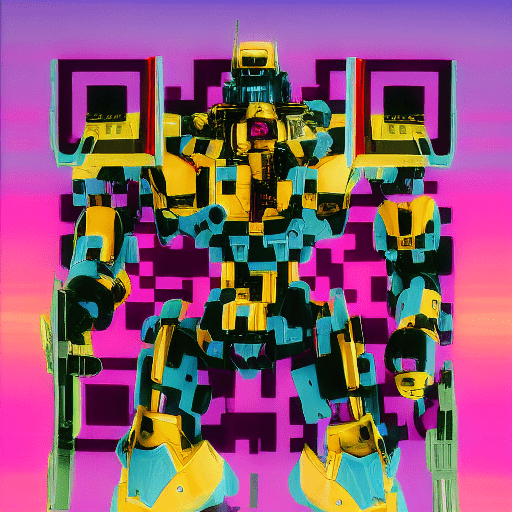

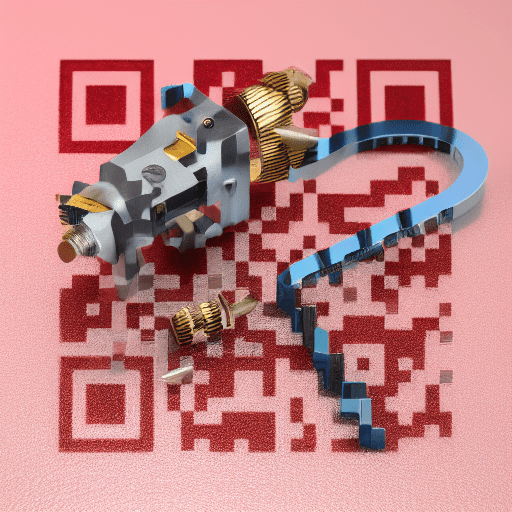

QR Codes are remarkably versatile: they serve as links, augmented reality triggers, Wi-Fi connection shortcuts, a quick way to share contacts, and part of authentication setups (TOTP), and they have even become art objects. Go to Google Images and search for "qr code art"; it's worth it.

And now, in the middle of the avalanche of Generative AI topics, we found out that you can combine real QR Codes with AI-generated art and, most surprisingly, keep them working. The process is not very complex: by testing a few parameters and models in Stable Diffusion, it is possible to obtain very interesting results.

The following collection of examples was just a first test. A very short version of the workflow: use a generic online QR Code generator*, attach the generated QR Code to ControlNet twice (once with a brightness model and once with a tile model), and run your prompts in your local Stable Diffusion.
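For readers who prefer scripting over clicking, the "same QR Code in two ControlNet units" idea can be sketched as a request payload. This is only a sketch under assumptions that are not from this post: it assumes the AUTOMATIC1111 web UI with the ControlNet extension and its `txt2img` API, the model names are the commonly distributed brightness and tile checkpoints and must match whatever is installed locally, and the weights, steps, and image size are placeholder values to tune.

```python
import base64
import json


def controlnet_unit(image_b64: str, model: str, weight: float) -> dict:
    """Describe one ControlNet unit that receives the QR Code image unchanged."""
    return {
        "image": image_b64,
        "module": "none",  # no preprocessor: the QR Code is already the control image
        "model": model,    # assumption: must match a model name in your install
        "weight": weight,  # placeholder value, tune by experiment
    }


def build_payload(qr_png: bytes, prompt: str) -> dict:
    """Build a txt2img payload that attaches the same QR Code to two units."""
    image_b64 = base64.b64encode(qr_png).decode("ascii")
    return {
        "prompt": prompt,
        "steps": 30,    # placeholder
        "width": 768,   # placeholder
        "height": 768,  # placeholder
        "alwayson_scripts": {
            "controlnet": {
                "args": [
                    # Unit 1: brightness model (assumed name)
                    controlnet_unit(image_b64, "control_v1p_sd15_brightness", 0.35),
                    # Unit 2: tile model (assumed name)
                    controlnet_unit(image_b64, "control_v11f1e_sd15_tile", 0.60),
                ]
            }
        },
    }
```

A call such as `json.dumps(build_payload(open("qr.png", "rb").read(), "a castle on a hill"))` produces the body that would be sent to the local `/sdapi/v1/txt2img` endpoint. The sketch stops before the network call on purpose, since the host, port, and installed models vary from machine to machine.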

* An important detail: QR Code generators have a parameter called ECL, and for this type of experiment the ECL must be H (high). ECL stands for error correction level; it indicates how well the QR Code can "resist" defects (stains, dirt, scratches) and still be read with its information intact. At level H, roughly 30% of the code can be damaged and still be recovered, which is what leaves room for the artwork to distort the pattern.
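If you would rather generate the QR Code locally than use an online generator, the ECL choice might look like the sketch below. It assumes the third-party `qrcode` package (with Pillow) is installed, which this post did not use. The URL, file name, and box size in the usage note are placeholders, and the percentages are the approximate recovery capacities usually quoted for each level.

```python
# Approximate share of a QR Code that each error correction level can recover.
ECL_RECOVERY = {"L": 0.07, "M": 0.15, "Q": 0.25, "H": 0.30}


def strongest_level() -> str:
    """Return the level with the highest recovery capacity."""
    return max(ECL_RECOVERY, key=ECL_RECOVERY.get)


def make_qr(data: str, path: str) -> None:
    """Save a QR Code for `data` at `path` using error correction level H."""
    # Imported here so the table above stays usable without the library.
    import qrcode  # assumption: third-party package, `pip install "qrcode[pil]"`
    from qrcode.constants import ERROR_CORRECT_H

    qr = qrcode.QRCode(
        error_correction=ERROR_CORRECT_H,  # level H, as this experiment requires
        box_size=16,                       # pixels per module (placeholder)
        border=4,                          # quiet zone width in modules
    )
    qr.add_data(data)
    qr.make(fit=True)  # pick the smallest version that fits the data
    qr.make_image(fill_color="black", back_color="white").save(path)
```

Calling `make_qr("https://example.com", "qr_h.png")` writes the image to disk. Shorter content gives a smaller, less dense code, which tends to leave the generated art more room.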

The QR Codes in this post were tested with the iPhone's native camera; they should work on most Android phones as well. Some QR Codes are harder to read, and recognition is usually better from a certain distance.